2013 Australian aid stakeholder survey. Part 2: and now the bad news

By Stephen Howes and Jonathan Pryke

19 December 2013

In our post yesterday, we reported on the good news from the 2013 Australian Aid Stakeholder survey. Most of the 356 experts and practitioners we surveyed are positive about the effectiveness of Australian aid, and they think that the sectoral and geographic priorities of the program are largely right.

But the survey also reveals that all is not well in the world of Australian aid. The first sign of this actually comes in the results we presented yesterday. On a scale of 1 to 5, the question of how our aid compares to the OECD average in terms of effectiveness scores only 3.3, just above a bare pass. In 2011, when launching the new Australian aid strategy, then Foreign Minister Rudd said that he wanted “to see an aid program that is world-leading in its effectiveness”. Clearly we have a long way to go in realizing that aspiration.

We also asked people about both the 2011 Australian aid strategy and the implementation of that strategy. Respondents are largely satisfied with the strategy (it gets a score of 3.7, which is good given that scores rarely exceed 4), but much less so with its implementation, which gets a score of only 3.2, again close to a bare pass.

The sense that the aid reform agenda is unfinished comes through most clearly in a series of questions we asked about 17 aid program "attributes". We drew these from the 2011 Independent Review of Aid Effectiveness. They are factors that an effective and strong aid program requires, and that the Aid Review identified as important in the Australian context.

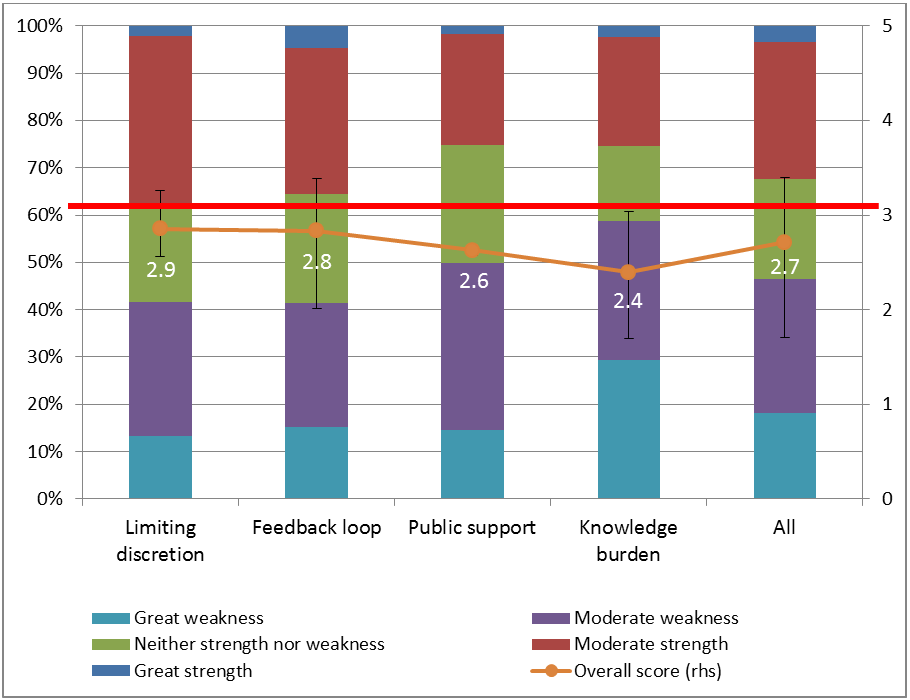

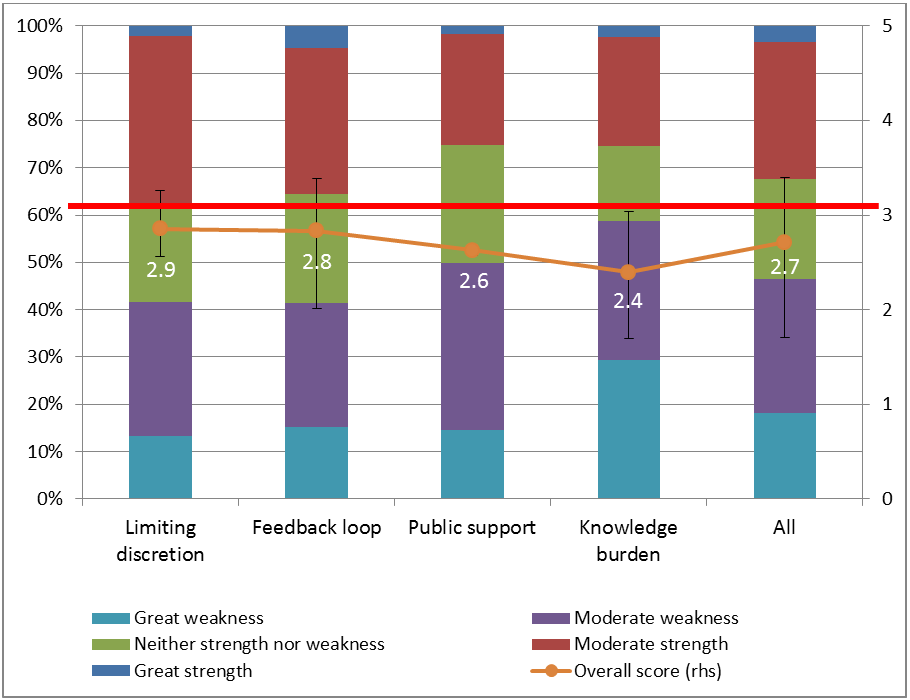

You can find the details on all 17 attributes in our report. They can be grouped into four categories of aid challenges:

- Enhancing the performance feedback loop: ensuring better feedback, and that the systems and incentives are in place to respond to that feedback.

- Managing the knowledge burden: ensuring that you have the staff and the partnerships to cope with the massive knowledge demands (across countries and sectors) that effective aid requires.

- Limiting discretion: ensuring that aid-funded efforts aren’t spread too thin.

- Building popular support for aid: ensuring that political leadership and community engagement are in place.

By averaging the scores for the attributes within each of the four categories, we can rate how well the aid program is doing in each of these four areas. The figure below uses the same method as the graphs in yesterday's post. For each of the 17 aid program attributes, respondents chose one of five options: a great weakness; a weakness; neither a weakness nor a strength; a strength; or a great strength. The columns show, by category, the average proportion of respondents choosing each option. We also assign each response a score from 1 (a great weakness) to 5 (a great strength). Averaging these scores across all respondents gives an overall score, where 5 is the maximum, 1 the minimum and 3 a bare pass, indicated by the red line. These scores are shown by the line graph. The error bars show the range of scores for individual attributes within each of the four categories.
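The scoring arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not the authors' actual analysis code: it assumes that a category score is the unweighted average of its attribute scores and that the error bars span the lowest and highest attribute scores in a category. The function names (`attribute_score`, `category_score`, `category_range`) are hypothetical.

```python
from statistics import mean

# Score assigned to each response option, from 1 (a great weakness) to 5 (a great strength).
SCORES = {
    "a great weakness": 1,
    "a weakness": 2,
    "neither a weakness nor a strength": 3,
    "a strength": 4,
    "a great strength": 5,
}


def attribute_score(counts):
    """Average score for one attribute, given the number of respondents choosing each option."""
    total = sum(counts.values())
    return sum(SCORES[option] * n for option, n in counts.items()) / total


def category_score(attribute_scores):
    """Assumed: unweighted mean of the attribute scores within one category."""
    return mean(attribute_scores)


def category_range(attribute_scores):
    """Assumed: error bars run from the lowest to the highest attribute score in a category."""
    return min(attribute_scores), max(attribute_scores)
```

For example, with hypothetical counts of 10, 30, 20, 30 and 10 respondents across the five options (from a great weakness to a great strength), `attribute_score` returns 3.0, exactly the bare-pass mark. These counts are illustrative only and are not survey data.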

None of the four categories achieved an average score of 3, a pass mark. The worst-performing was managing the knowledge burden, which achieved a mark of only 2.4, but even the best, limiting discretion, got an average score of only 2.9. The average across all 17 attributes was just 2.7.

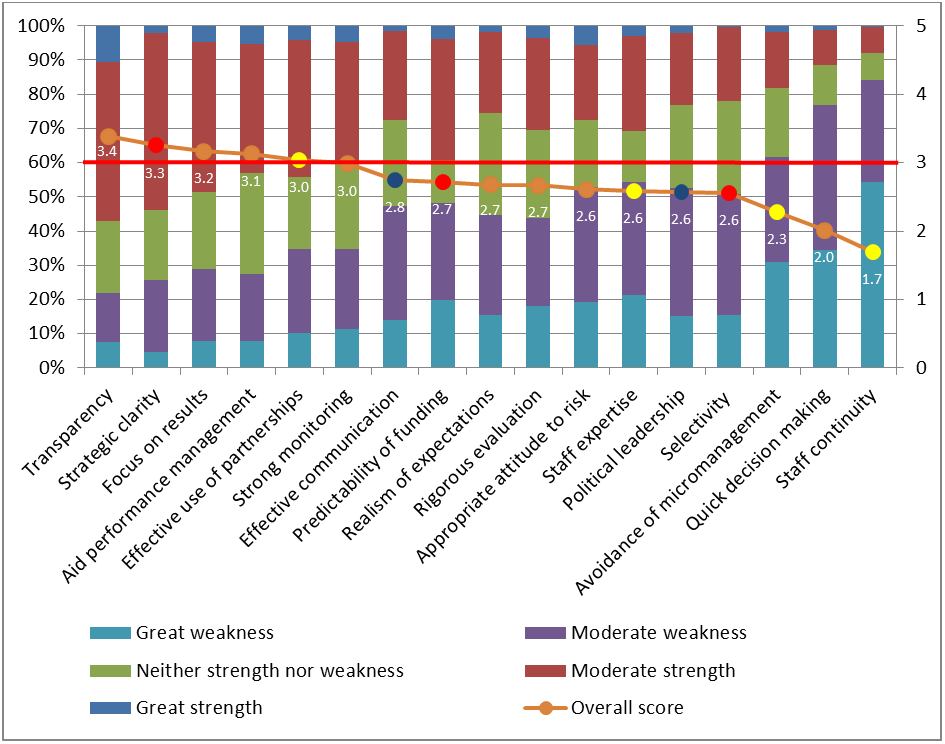

The next graph shows the scores for each of the 17 attributes. Transparency was viewed as the aid program's greatest strength, with a score of 3.4. Only six attributes scored 3 or above; the remaining 11 scored below 3. Staff continuity was seen as the biggest weakness, with a score of only 1.7.

Note: Tan markers are in the enhancing performance feedback loop category (8 attributes); red are in the limiting discretion category (3 attributes); yellow are in the managing knowledge category (4 attributes); and blue are in the building support category (2 attributes).

It is interesting to analyse which attributes did better than others, and to look at differences across stakeholder groups (all of which is covered in our report). Overwhelmingly, however, the message from the survey, shared by all stakeholder groups (whose ratings and rankings are quite similar), is that we have a long way to go on the aid effectiveness front. We might have a pretty good aid program, but the surprisingly low scores for the 17 aid program attributes suggest that we could and should do a lot better.

The 2011 Aid Review’s summary assessment of the aid program was: “improvable but good.” If we had to summarize the assessment of Australia’s aid practitioners and experts, it would be: “good but very improvable.”

This is the real challenge facing the new Government when it comes to aid. On the one hand, it is more than encouraging that the Minister for Foreign Affairs has made a strong personal commitment to progress aid effectiveness. On the other, the perfect storm of the integration of AusAID with DFAT, the budget and staff cuts, and the move away from the strategic aid framework of the previous Government risks undermining effectiveness in the short run, and we are yet to see a new aid effectiveness reform agenda emerge.

Perhaps a repeat of the stakeholder survey in a couple of years’ time will help show us just how much progress there has, or has not, been in tackling Australia’s unfinished aid reform agenda.

Stephen Howes is Director of the Development Policy Centre. Jonathan Pryke is a Research Officer at the Centre.

This is the second in a series of blog posts on the findings of the 2013 Australian aid stakeholder survey. Find the series here.

About the author/s

Stephen Howes

Stephen Howes is Director of the Development Policy Centre and Professor of Economics at the Crawford School of Public Policy at The Australian National University.

Jonathan Pryke

Jonathan Pryke worked at the Development Policy Centre from 2011, and left in mid-2015 to join the Lowy Institute, where he is now Director of the Pacific Islands Program. He has a Master of Public Policy/Master of Diplomacy from Crawford School of Public Policy and the College of Diplomacy, ANU.