The Department of Foreign Affairs and Trade (DFAT) has released its second Performance of Australian Aid report, this one for 2014-15. I commented on the last one (for 2013-14) at last year’s April Australian Aid Evaluations Forum, and I commented on this one at this year’s forum. Unfortunately, I didn’t get time to write up my comments last year, but you can see my presentation here [pdf]. I was quite positive about the 2013-14 report, saying that it was the best of the (I counted) five performance reports about the aid program produced in recent years. I liked the fact that it was timely, and that it used country and project performance data.

The same positive remarks can be made about the new report, and some more. This year’s report has new discussions on partner performance, and more detailed sectoral coverage. Overall, however, I’m less enthusiastic this year, for reasons that will become apparent.

The 2014-15 report is a positive, indeed glowing, assessment of an aid program that emerges as being in the best of health. Of the ten targets, only three haven’t been met. Two are not due to be met until next year, or later, and they are on track. The third target – relating to gender equity – is out by just a couple of percentage points.

The ten targets are ticked off by page 23. In my analysis last year I paid most attention to these targets, but this year I spent more time reading the text in the remaining 80-odd pages of the report. These go through the rest of the aid program by region, partner and sector. The analysis starts off quite soberly by noting that in some regions one-third or more of aid objectives are at risk of not being met. However, on the sixty subsequent pages the balance of positive to negative remarks is very strongly in favour of the former. Figure 11 tells us that 45% of program objectives in the Pacific are classified at risk. But the subsequent four pages of text on the Pacific are overwhelmingly positive. I counted four negative comments and 35 positive ones.

The text is thoroughly spun. For example, we are told that an independent evaluation found that the Australia Pacific Technical College (APTC) “has helped improve standards of technical education and training through partnerships with national institutions” (p. 30). There is no mention that the same evaluation found APTC to have an unacceptably low rate of return. TB prevention in Kiribati is going well, and highlighted. TB control in PNG is very problematic, but not mentioned. It might be good that the Mekong River Commission, which we are supporting, organised “a regional consultation process for the Laos Don Sahong hydropower project” (p. 38), but there is no mention that Laos is proceeding with the construction of this dam despite opposition from its neighbours. (You can’t blame the aid program for this, but you can wonder why you’d highlight the process if it didn’t help improve the outcome.)

Not much weight can be put on the text. What about the numbers? A positive feature of the report is that it contains, at the end, an assessment of it by DFAT’s Office of Development Effectiveness (ODE). The ODE note highlights the “unprecedented improvement in the 2015 ratings given to investments against four of the six” criteria used. One reason for this is “changes in the wording” used to define the six-point quality scale by which projects are assessed. Increases in the “proportion of investments rated as satisfactory against different criteria should be treated with caution.” We’re not given more detail, and we are reassured that DFAT will fix the problem next year. But there is the possibility, at least this year, that at least one of the targets alleged to be met in fact hasn’t been.

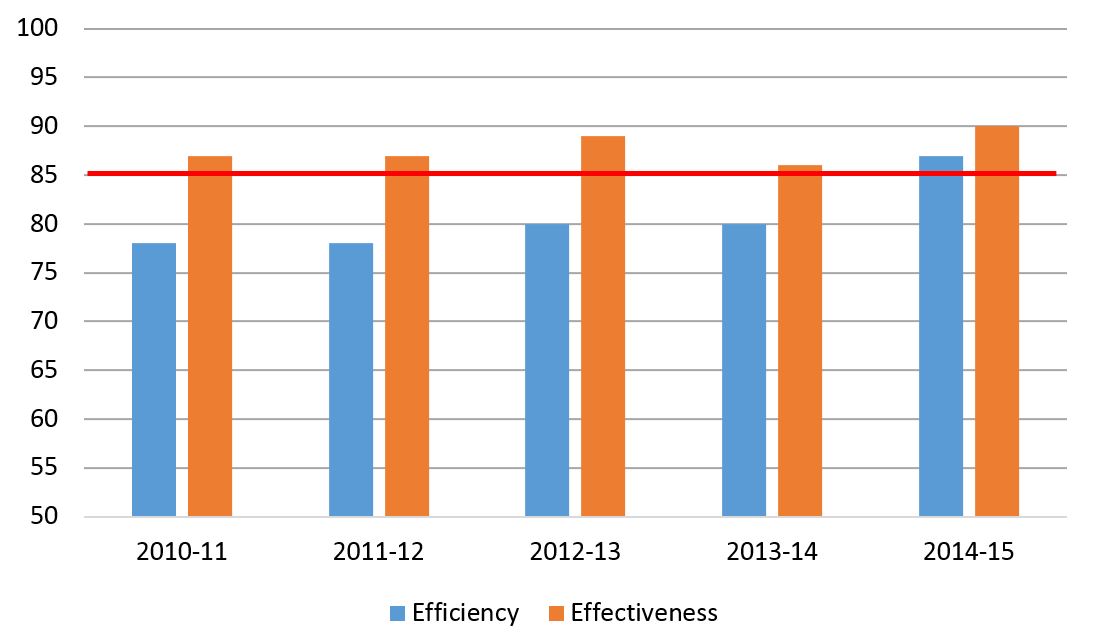

The graph below (not in the report) shows the increase in efficiency and effectiveness this year. Would the 85% efficiency target have been achieved without the changes in definition? From the ODE caveat, it seems unlikely, or at least one can’t be sure. Unfortunately, no explanation is given in the main body of the report for the improvement in efficiency. Indeed, there is no mention that there has been an improvement. Avoiding the issue is hardly the right approach. Even if these changes in rating definitions were justified, one wants something on what they are, why they were made, and what sort of impact they have.

Efficiency and effectiveness ratings over time

Source: 2013-14 and 2014-15 Performance of Australian Aid reports. The red line is the target.

Has underlying efficiency actually improved? We don’t know. Jim Adams, the Chair of the Independent Evaluation Committee, signs off on the report as being “sound and credible”. With so much textual spin and the problems around the ratings, I’m not as convinced.

These objections aside, it is clear that the ten targets will all be met, or close to it. Jim Adams goes on in his comment on the report to make the good suggestion that, given that most of the existing benchmarks have been met, new benchmarks should be selected. The stakeholder survey on Australian aid that we just carried out gives some good guidance on what those new benchmarks should be. Transparency has gone from a perceived strength to a perceived weakness of the aid program. There is one paragraph in the report on transparency, which reiterates the Government’s commitment to “high standards of transparency and accountability.” Given the commitment, and the deterioration in perceived performance, why not make transparency a benchmark? The stakeholder survey also points to overly-rapid staff rotation as a massive (perennial) problem, as well as to declining staff expertise as an increasingly worrying one.

A more balanced text and some more challenging targets would make for a more balanced appraisal next year, and, ultimately, a more effective aid program.

Stephen Howes is Director of the Development Policy Centre. You can listen to the podcast of April’s Australian Aid Evaluations Forum here, and view the presentations here. Scott Dawson of DFAT spoke and explained the changes in ratings. The Forum also featured a discussion of the recent scholarships and women’s leadership evaluation.

The credibility of any government department’s evaluation of its own efficiency and effectiveness must be seen as liable to be skewed by the clear interest in self-preservation of those who are currently in charge.

That a Senate report on overseas aid was tactically released after the recent election was called and Parliament prorogued is a good indication of how any constructive suggestions will be lost and ignored by those who are very happy, thank you, with the status quo.

The combination of the Performance of Australian Aid report and the specific country performance reports and their timely publication is certainly welcome and moves ODA reporting in the right direction. However, as you imply Stephen, the reporting of the fairly vague process quality scores and benchmark scores does not leave the reader, inside or outside DFAT, with a clear picture of what the aid program is achieving and what needs to be done to further improve performance.

I think that the next steps required to enhance the Performance of Australian Aid report are:

– a summary of qualitative and quantitative OUTCOMES (e.g. how many children educated, change in child mortality rate, change in export sales) against each of the country and multilateral program objectives and their targets, and a whole-of-program table which sums up the quantitative outcomes of the program (as in Appendix 5 of the 2013-14 DFAT Annual Report). Readers need to know what the program is achieving, not just the quality of its processes.

– a plain text summary of outcomes, challenges and plans for the ten biggest country or multilateral programs so readers come away with more of a feel for the program and DFAT is in a better position to explain the aid program.

– bringing the publication date forward by a month so that informed external feedback can be incorporated into the development of the next year’s aid budget.