Numbers, trends or norms: what changes Australians’ opinions about aid?

By Terence Wood

18 February 2016

When the Australian government cut aid last year, Australians didn’t exactly race to the barricades. In fact, many seemed quite happy. When we commissioned a survey question about the 2015-16 aid cuts, a majority of respondents supported them.

Since then, we’ve started studying what, if anything, might change Australians’ views about aid. There’s an obvious practical reason for this: helping campaigners. Yet the work is intellectually interesting too – a chance to learn more about what shapes humans’ (intermittent) impulse to aid distant strangers.

To study what might shift Australians’ attitudes to aid, we used survey experiments designed to learn whether providing different types of information changes support for aid. To date we’ve run three separate experiments, each involving about a thousand people. In each experiment, about half of the participants (the control group) were asked a simple question about whether Australia gives too much or too little aid, with no additional information. This basic question stayed the same in all three experiments.

In each experiment, the other half of the participants (the treatment group) were asked an augmented question: the basic question plus some information on Australian aid giving. People were randomly allocated to the treatment and control groups, which means that (to simplify just a little) any overall difference between the responses of the two groups must have been caused by the extra information the treatment group was given.
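To make the logic of this comparison concrete, here is a minimal sketch of one standard way to check whether a gap between control and treatment responses is bigger than chance alone would plausibly produce: a pooled two-proportion z-test. It is an illustration only, not necessarily the test used in these experiments. The function name and the respondent counts are hypothetical, and this simple test makes no adjustment for imbalances between the groups.

```python
import math


def two_proportion_z_test(x_control, n_control, x_treat, n_treat):
    """Hypothetical helper: compare the share of respondents giving one answer
    (e.g. 'too much aid') in the control and treatment groups.

    Returns (difference in proportions, z statistic, two-sided p-value).
    """
    p_control = x_control / n_control
    p_treat = x_treat / n_treat
    # Pooled proportion, assuming the extra information has no effect (null hypothesis)
    p_pool = (x_control + x_treat) / (n_control + n_treat)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_control + 1 / n_treat))
    z = (p_treat - p_control) / se
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_treat - p_control, z, p_value


# Hypothetical counts: 200 of 500 control respondents and 175 of 500 treated
# respondents say Australia gives "too much" aid (40% versus 35%).
diff, z, p = two_proportion_z_test(200, 500, 175, 500)
print(f"difference = {diff:+.3f}, z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers, a five-point gap between groups of about 500 people gives a p-value of roughly 0.1, short of the conventional 0.05 threshold. That is why modest shifts in samples of this size can fail to reach statistical significance.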

In the first experiment people in the treatment group were given information on how little aid Australia gives as a percentage of total federal spending. In the second experiment the treatment group was shown how Australian aid as a share of Gross National Income (GNI) has declined over time. In the third experiment declining Australian aid was contrasted with rapidly increasing aid levels in the UK. (The full wording of all the questions is here.)

Before you read on to the results, consider which of the three types of additional information you think would be most likely to change people’s minds.

For what it’s worth, I was confident that the information in Experiment 1, on just how little aid Australia gives, would shift opinion a lot. Last year, as we studied publicly available surveys on aid, my colleague Camilla Burkot found what I like to call the ‘aid ignorance effect’: people who don’t know how much aid Australia gives, or who overestimate how much is given, are more likely to favour aid cuts than their better-informed compatriots. This problem, I thought, could be easily cured by explaining how little Australia gives. I also thought that telling people about trends in Australian aid over time might be even more effective, by providing a frame of reference for what Australia could do: what it had done in the past. On the other hand I thought the comparison with the UK would add little: if there’s one thing I’ve learnt since moving to Australia, it’s that Australians are not interested in what the British do.

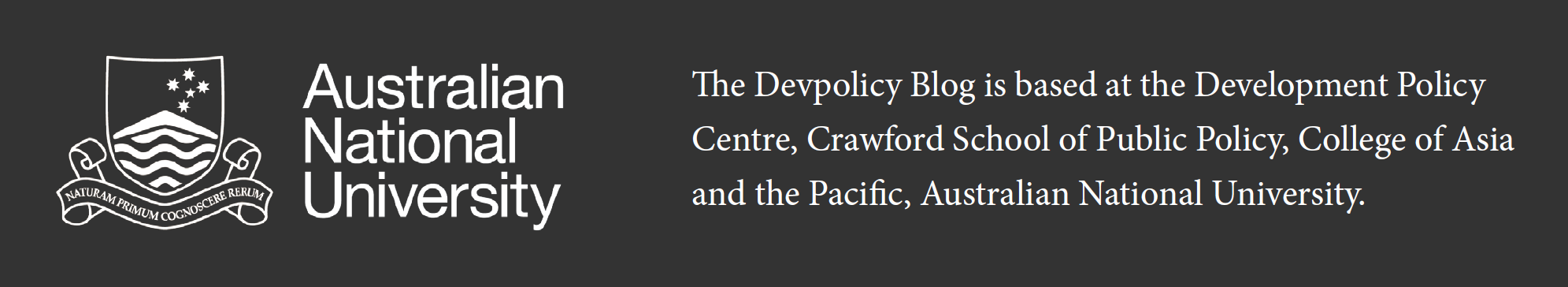

The charts show the results of the experiments. Blue bars show the percentage of control group respondents in each response category. Red bars do the same for the treatment group. If the treatment had an effect, the blue and red bars will differ noticeably in length.

Experiment 1 – telling people how little Australia gives

The first chart shows us that I was utterly wrong: telling people just how little aid Australia gives changed nothing. There was a slight increase in the proportion of respondents who thought Australia gave too little aid, but the change was very small, and wasn’t statistically significant. So much for curing the ignorance effect.

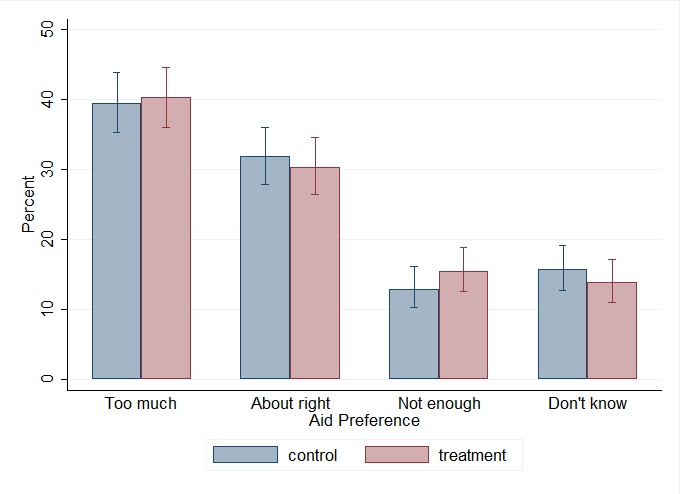

Experiment 2 – information on trends in Australian aid over time

Adding information on trends in Australian aid over time had a slightly larger impact, reducing the percentage of respondents who said Australia gives too much aid by about five percentage points. This change was quite close to being statistically significant once I corrected for an imbalance between the treatment and control groups. Even so, the magnitude of the effect was modest. Adding past giving as a frame of reference may help, but its impact isn’t huge.

Experiment 3 – comparisons with the UK

On the other hand, the impact of contrasting Australia with the UK is startling. Many more respondents thought Australia gave too little, and a lot fewer thought it gave too much.

While the result clearly shows just how little I know about the Australian psyche, unfortunately it doesn’t – I think – give campaigners a magic bullet. The UK has not been a typical donor in the last decade. And if you start a debate contrasting Australia with the UK, you may find your opponents countering with examples of less generous countries, like New Zealand or the United States.

Taken together, however, the three experiments do provide some broader insights into how people think about aid volumes, and what might change their thinking. Specifically, information on its own is meaningless without a frame of reference, or point of contrast. I had thought that, in a country that has become less generous with time, Experiment 2 might have provided this, but actually – perhaps because Australians don’t want to be seen as bad international citizens – the most effective point of contrast appears to be with what other countries are doing.

Back in Old Blighty, no doubt, Queen Elizabeth would be heartened if she knew her unruly antipodean subjects could be influenced in this way. Here in Australia, however, the finding gives us an important new line of inquiry: learning more about what types of international comparisons work, and why.

[Update: I’ve now published a full Devpolicy discussion paper, which covers relevant literature and includes robustness testing. The results are the same. The paper is here.]

Terence Wood is a Research Fellow at the Development Policy Centre. His PhD focused on Solomon Islands electoral politics. He used to work for the New Zealand Government Aid Programme.

Terence and Camilla also presented this research at the 2016 Australasian Aid Conference; more details are available here.

Terence Wood is a Fellow at the Development Policy Centre. His research focuses on political governance in Western Melanesia, and Australian and New Zealand aid.